AMS/HUS Volume migration

Sometimes, for any number of reasons, you need to perform a non-disruptive LUN migration from one DP pool to another. Thankfully, it’s trivially easy to do on Hitachi’s AMS/HUS storage arrays:

- First, enable Volume Migration if this option is still off:

    auopt -unit array_name -option VOL-MIGRATION -st enable

- Create a new LUN that will be used as the destination:

    auluadd -unit array_name -lu 2 -dppoolno 1 -size 1468006400 -widestriping enable -fullcapacity enable

- Create a special LUN called the DMLU (Differential Management Logical Unit), which keeps the differential data during migration. Please note that this LUN can’t be smaller than 10GB and full capacity mode can’t be enabled for it:

    auluadd -unit array_name -lu 3 -dppoolno 1 -size 70G -widestriping enable
    audmlu -unit array_name -set -lu 3

- Start the LUN migration from the source (LUN 1) to the destination LUN (LUN 2) that we’ve just created:

    aumvolmigration -unit array_name -add -lu 2
    aumvolmigration -unit array_name -create -pvol 1 -svol 2

- Basically, we’ve just paired the two LUNs and started the copy from one to the other. To see the progress, run the following command:

    aumvolmigration -unit array_name -refer

- Once the copy is over, the pair can be split. Since the ID of the LUN where the data lives doesn’t change, the destination LUN (LUN 2 in our example) can be deallocated and freed:

    aumvolmigration -unit array_name -split -pvol 1 -svol 2

Just a reminder: to keep the migration non-disruptive, the original LUN ID must not change. That’s why the only LUN that can be deleted at the end is the one we created in step 2, the LUN with ID 2.
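The `-size 1468006400` argument in the `auluadd` command for LUN 2 carries no unit suffix, unlike the `70G` used for the DMLU. Here is a minimal sketch of the conversion, assuming (this is not stated above) that a bare number is a count of 512-byte blocks. `BLOCK_BYTES`, `blocks_to_gib` and `gib_to_blocks` are illustrative names, not part of the Hitachi CLI.

```python
# Assumption: a bare auluadd -size value is a count of 512-byte blocks.
BLOCK_BYTES = 512
GIB = 1024 ** 3


def blocks_to_gib(blocks: int) -> float:
    """Convert a block count to GiB under the 512-byte-block assumption."""
    return blocks * BLOCK_BYTES / GIB


def gib_to_blocks(gib: int) -> int:
    """Inverse conversion, handy when sizing a destination LUN to match a source."""
    return gib * GIB // BLOCK_BYTES


if __name__ == "__main__":
    # Size used for LUN 2 in the auluadd command.
    print(blocks_to_gib(1468006400))
```

Under that assumption the destination LUN works out to 700 GiB. Check the unit against your array’s CLI reference before sizing a real LUN.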

That’s it. Have fun and safe migrations.

MacBook Pro and three consecutive LED flashes

Just in case you have a MacBook Pro (mine is a 15″ from 2008) and one day it stops working or shuts itself down shortly after you’ve logged in, don’t panic and don’t run to the nearest Apple store to buy a new one. There is probably a solution. There are other symptoms of failure, e.g. it won’t boot or chime, or the display stays black even though you can hear the fans spinning. None of that matters (I had all of these with my laptop); the one thing that should give you hope is the following: look at the front light indicator, and if it flashes three times in a row, pauses for a while, and then repeats the three flashes, try replacing your memory DIMMs.

Frankly speaking, I don’t know the root cause of the issue, but it worked for me: once I replaced my 2x2GB Kingston memory DIMMs with the stock ones (2x1GB), the problem was gone.

Hope it saves you a couple of quid.

Update.

Below is the link to the official knowledge base article that describes that.

OpenIndiana

Finally, OpenIndiana has found its place in my VirtualBox environment. I very much hope I’ll have enough time to play with it. Anyway, it’s nice to have it as a Solaris reference.

Is technical support worth its money? Not so sure.

I can’t stop wondering why the vast majority of the support requests that I or my colleagues have dealt with in the past were treated, let’s say, in a strange way. The vendor would fight, yes fight, very hard in an attempt to defend itself and shift the blame onto the customer or another vendor if at all possible, using any pretext.

The latest example, closed a couple of days ago, had been open for more than 10 months. We raised a support ticket in January 2012, and during all that time the vendor kept insisting that the problem was in the SAN or in our storage array. Eventually they acknowledged that the problem was on their side, but they didn’t have a reasonable workaround or a patch for us. The only real solution we were offered was an upgrade to a major version. I wouldn’t worry so much if that had been a single example, but there are plenty of similar cases, and poor-quality technical support has become ridiculously common in our everyday IT life.

Work hard and it will pay off.

It’s surprising how a long and productive day can turn everything around and make me feel contented and refreshed, even when the previous day brought a load of disappointment.

How to quickly fix osad failure to connect to the Spacewalk server

If one day you notice that your Spacewalk client has started spitting out the following errors, just know that it’s very easy to fix.

2012/03/15 17:17:43 +04:00 29835 0.0.0.0: osad/jabber_lib.setup_connection('Connected to jabber server', 'spacewalk-server-name')

2012/03/15 17:17:43 +04:00 29835 0.0.0.0: osad/jabber_lib.register('ERROR', 'Invalid password')

- Stop Spacewalk and clear the jabberd database files:

    rhn-satellite stop
    rm -f /var/lib/jabberd/db/*

- Connect to PostgreSQL and run this trivial SQL statement:

    delete from rhnPushDispatcher;

- Back at the CLI, start the Spacewalk processes again:

    rhn-satellite start
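Before applying the fix, it can be worth confirming that a client log really shows this particular failure. Here is a minimal sketch, assuming the log lines look like the two quoted above. `has_invalid_password` is a hypothetical helper, and the log file location is deliberately left to the caller.

```python
import re

# Matches the register() failure shown in the osad log excerpt.
# Straight and typographic quotes are both accepted, because quotes are
# often altered when logs are pasted into web pages.
INVALID_PASSWORD = re.compile(
    r"osad/jabber_lib\.register\(['‘’]ERROR['‘’],\s*['‘’]Invalid password['‘’]\)"
)


def has_invalid_password(lines):
    """Return True if any log line reports the jabber 'Invalid password' error."""
    return any(INVALID_PASSWORD.search(line) for line in lines)


if __name__ == "__main__":
    sample = [
        "2012/03/15 17:17:43 +04:00 29835 0.0.0.0: "
        "osad/jabber_lib.setup_connection('Connected to jabber server', 'spacewalk-server-name')",
        "2012/03/15 17:17:43 +04:00 29835 0.0.0.0: "
        "osad/jabber_lib.register('ERROR', 'Invalid password')",
    ]
    print(has_invalid_password(sample))
```

Any iterable of lines works, so the function can also be fed an open file object.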

Even after 30 you can still be a good student

A week ago I received a Statement of Accomplishment for the Cryptography course, which proves that there is always a chance to take on a challenge and learn something new. Don’t give up; strive to broaden your vision and extend your personal knowledge base. Pluck up your courage and join the class of your choice at www.coursera.org

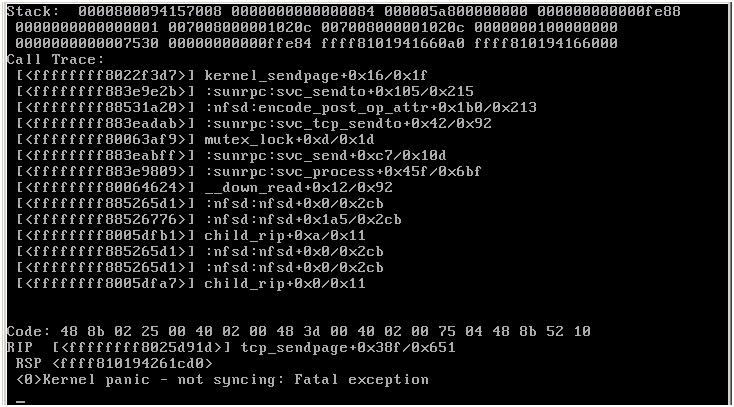

NFSD panics on RHEL 5.8

If you are as unlucky as I am and your RHEL 5.8 server has just spat out the same call trace as in the picture I attached, then I’m here to make your problem less painful. If you have an RHN account, you can find a thorough explanation and the root cause here and here

If you don’t have one, you will find the answer below:

Root Cause

The rq_pages array has 1MB/PAGE_SIZE+2 elements. The loop in svc_recv attempts to allocate sv_bufsz/PAGE_SIZE+2 pages. But the NFS server is setting sv_bufsz to over a megabyte, with the result that svc_recv may attempt to allocate 1MB/PAGE_SIZE+3 pages and run past the end of the array, overwriting rq_respages.

Resolution

echo 524288 >/proc/fs/nfsd/max_block_size

Note this has to be done after mounting /proc/fs/nfsd, but before starting nfsd. It is recommended this change be made via modprobe.conf.dist as follows:

# grep max_block_size /etc/modprobe.d/modprobe.conf.dist

install nfsd /sbin/modprobe --first-time --ignore-install nfsd && { /bin/mount -t nfsd nfsd /proc/fs/nfsd > /dev/null 2>&1 || :; echo 524288 > /proc/fs/nfsd/max_block_size; }
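The arithmetic behind the root cause, and the reason a 524288-byte block size is safe, can be checked with a few lines. This is only a sketch under stated assumptions, not the kernel code: it assumes 4 KiB pages, assumes the division rounds up, and models "over a megabyte" as the block size plus a hypothetical 1024 bytes of header room (`OVERHEAD`). None of these values come from the text above.

```python
PAGE_SIZE = 4096            # assumption: 4 KiB pages
MAX_PAYLOAD = 1024 * 1024   # the 1MB from the root cause description
OVERHEAD = 1024             # assumption: hypothetical header room on top of the block size

# Number of elements in rq_pages: 1MB/PAGE_SIZE + 2
RQ_PAGES_LEN = MAX_PAYLOAD // PAGE_SIZE + 2


def pages_requested(sv_bufsz: int) -> int:
    """Pages the receive loop would ask for: sv_bufsz/PAGE_SIZE (rounded up) + 2."""
    return -(-sv_bufsz // PAGE_SIZE) + 2


def overruns(max_block_size: int) -> bool:
    """True if the request would run past the end of the rq_pages array."""
    return pages_requested(max_block_size + OVERHEAD) > RQ_PAGES_LEN


if __name__ == "__main__":
    for size in (1024 * 1024, 524288):
        print(size, pages_requested(size + OVERHEAD), RQ_PAGES_LEN, overruns(size))
```

With a 1 MiB block size the request is one page longer than the array. With 524288 it stays well inside.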

Just boring

The title says it all. Nothing is happening at all and I feel stuck in a dead calm. Below are the most noticeable events that have taken place recently:

- The first part of the Crypto class is over, with the next portion expected to be offered sometime in the autumn.

- Finally switched from iPhone to Google Galaxy Nexus and I have no regrets about that. This new device is really cool and I must admit that ICS has brought Android to an absolutely different level.

- A week ago my MacBook Pro 15″ waved goodbye and switched off, and every time I tried to start it, it greeted me with absolute silence and a flashing moon-colored light on its front. Only today I got it back from the AASP, where it had its logic board replaced. I was hoping that I had become another witness of TS2377 and was eligible for a free replacement, but unfortunately it wasn’t a problem with the NVIDIA GeForce 8600M GT, so I had to fork out quite a substantial sum of money. Anyway, it was a much better solution than buying a new one. Hope my silver friend will stay with me for another couple of years.

How to remove the last dead path in ESX

Feb 9 10:56:27 esx vmkernel: 47:18:55:03.308 cpu8:4225)WARNING: NMP: nmp_DeviceRetryCommand: Device "naa.60060e80056e030000006e030000004b": awaiting fast path state update for failover with I/O blocked. No prior reservation exists on the device.
Feb 9 10:56:27 esx vmkernel: 47:18:55:03.308 cpu8:4225)WARNING: NMP: nmp_DeviceStartLoop: NMP Device "naa.60060e80056e030000006e030000004b" is blocked. Not starting I/O from device.
Feb 9 10:56:28 esx vmkernel: 47:18:55:04.323 cpu8:4264)WARNING: NMP: nmpDeviceAttemptFailover: Retry world failover device "naa.60060e80056e030000006e030000004b" - issuing command 0x41027f458540
Feb 9 10:56:28 esx vmkernel: 47:18:55:04.323 cpu8:4264)WARNING: NMP: nmpDeviceAttemptFailover: Retry world failover device "naa.60060e80056e030000006e030000004b" - failed to issue command due to Not found (APD), try again...

If these log lines look familiar and you’ve been desperately trying to remove the last FC path to your storage array, which by an unlucky coincidence is also dead, then the following commands will probably help you deal with the issue:

# esxcli corestorage claiming unclaim -A vmhba1 -C 0 -T 3 -L 33 -d naa.60060e80056e030000006e030000004b -t location
# esxcfg-scsidevs -o naa.60060e80056e030000006e030000004b

Just don’t copy and paste it blindly; substitute your own C:T:L and naa.* values.

Worked for me like a charm.
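To avoid typos when substituting the C:T:L and naa.* values, the two command lines can be generated from a path name. This is a minimal sketch that assumes the path is written in the `vmhbaN:C<channel>:T<target>:L<lun>` form; `unclaim_commands` is a hypothetical helper that only builds strings using the flags shown above and runs nothing on the host.

```python
import re
import shlex

# Assumed path format: adapter name followed by channel, target and LUN numbers.
PATH_RE = re.compile(r"^(vmhba\d+):C(\d+):T(\d+):L(\d+)$")


def unclaim_commands(runtime_path: str, naa_id: str) -> list:
    """Build the unclaim and device-removal command lines for one dead path."""
    match = PATH_RE.match(runtime_path)
    if match is None:
        raise ValueError("unexpected path format: %r" % runtime_path)
    adapter, channel, target, lun = match.groups()
    unclaim = [
        "esxcli", "corestorage", "claiming", "unclaim",
        "-A", adapter, "-C", channel, "-T", target, "-L", lun,
        "-d", naa_id, "-t", "location",
    ]
    remove = ["esxcfg-scsidevs", "-o", naa_id]
    # shlex.quote keeps the output safe to paste even if an argument is odd.
    return [" ".join(shlex.quote(part) for part in cmd) for cmd in (unclaim, remove)]


if __name__ == "__main__":
    for line in unclaim_commands("vmhba1:C0:T3:L33",
                                 "naa.60060e80056e030000006e030000004b"):
        print(line)
```

Review the printed lines before running them on the host, since unclaiming the wrong path is not something you want to do by accident.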